Section: New Results

Online Temporal Detection of Daily-Living Human Activities in Long Untrimmed Video Streams

Participants : Abhishek Goel, Abdelrahman G. Abubakr, Michal Koperski, Francois Brémond.

keywords: Daily-living activity recognition, Human activity detection, Video surveillance, Smarthome

Many approaches have been proposed to solve the problem of activity recognition in short clipped videos, achieving impressive results with both hand-crafted and deep features. However, clipped videos are rarely available in real life: in applications such as robotics, video surveillance, and smart homes, cameras provide continuous video streams. Hence the importance of activity detection, which recognizes and localizes each activity occurring in a long video. Activity detection can be defined as the ability to localize the start and end of each human activity in the video, in addition to recognizing its label. Daily-living activities, such as eating, reading, and cooking, form a particularly challenging category: they have low inter-class variation, and the environments in which they are performed are similar. In this work, we focus on detecting daily-living activities in untrimmed video streams. We introduce a new online activity detection pipeline that uses a single sliding window in a novel way: the classifier is trained on sub-parts of the training activities, and during detection an online frame-level early detection is performed on sub-parts of long activities. Finally, a greedy, Markov-model-based post-processing algorithm is applied to remove false detections and improve the results. We test our approach on two daily-living datasets, DAHLIA and GAADRD, outperforming state-of-the-art results by more than 10%. The proposed work has been published in [43].
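To make the definition of activity detection concrete, the following minimal sketch turns a sequence of frame-level labels into localized activities, each given by a start frame, an end frame, and a label. The function name `frames_to_segments` and the `"none"` background label are illustrative assumptions, not part of the published pipeline.

```python
from itertools import groupby


def frames_to_segments(frame_labels, background="none"):
    """Group consecutive identical frame labels into (start, end, label) tuples.

    `end` is inclusive. Frames carrying the (assumed) background label are skipped.
    """
    segments = []
    index = 0
    for label, run in groupby(frame_labels):
        length = len(list(run))
        if label != background:
            segments.append((index, index + length - 1, label))
        index += length
    return segments


# Hypothetical frame-level output of a classifier on a 7-frame stream.
labels = ["none", "eat", "eat", "eat", "none", "read", "read"]
print(frames_to_segments(labels))
```

Each resulting tuple answers both questions posed by detection: where the activity starts and ends, and which activity it is.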

The workflow for processing untrimmed videos is composed of three tasks:

-

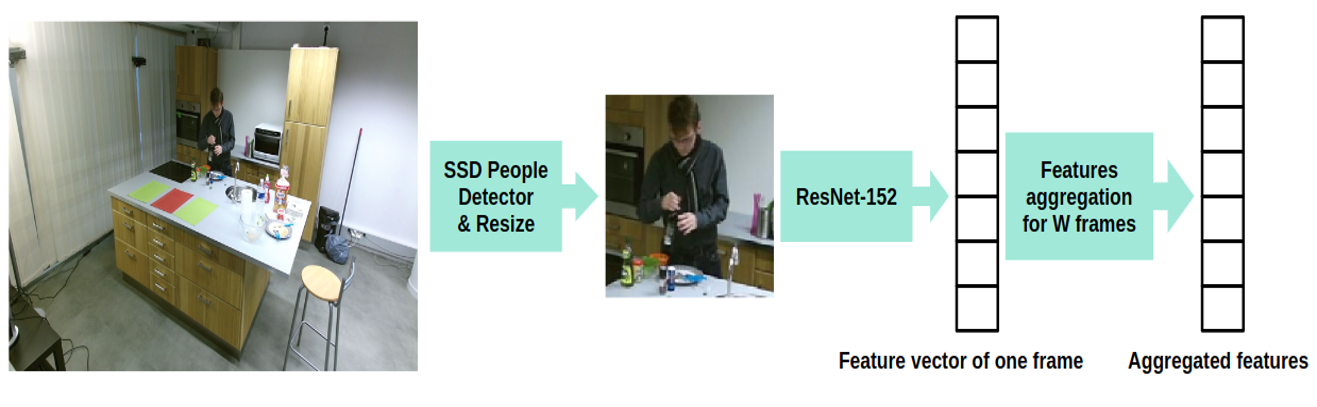

Feature extraction consists in extracting the Person-Centered CNN (PC-CNN) features as shown in fig. 18.

- Classifier training: all training videos are first divided into relatively small windows of W frames, each representing an activity sub-video (sub-part). Features are then generated for all these windows, and a linear SVM classifier is trained on all activity sub-videos.

-

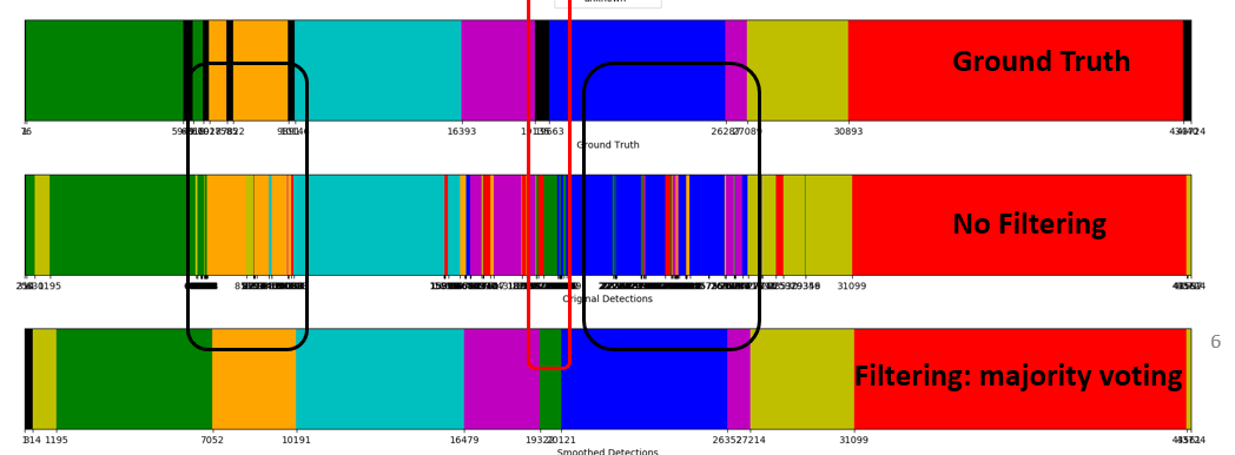

Majority voting filtering, as depicted in fig. 19, looks up for neighbors within a certain range that have the same label apply majority-voting between the labels
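The windowing and majority-voting steps described above can be sketched as follows. This is only an illustration under stated assumptions: the function names, the `stride` and `radius` parameters, and the tie-breaking rule (keep the original label on a tie) are hypothetical choices, since the text does not specify whether windows overlap or how ties are resolved. Feature extraction and SVM training are deliberately left out.

```python
from collections import Counter


def split_into_windows(num_frames, window_size, stride=None):
    """Return (start, end) frame ranges, end exclusive, of fixed-size windows.

    `stride` is an assumed parameter; by default windows do not overlap.
    A trailing partial window is dropped.
    """
    if stride is None:
        stride = window_size
    return [(s, s + window_size)
            for s in range(0, num_frames - window_size + 1, stride)]


def majority_vote_filter(labels, radius):
    """Replace each label by the most frequent label among its neighbors.

    Neighbors are the positions within `radius` of the current one
    (an assumed definition of the 'certain range').
    """
    smoothed = []
    for i in range(len(labels)):
        lo = max(0, i - radius)
        hi = min(len(labels), i + radius + 1)
        counts = Counter(labels[lo:hi])
        top = max(counts.values())
        if counts[labels[i]] == top:
            # Assumed tie-breaking rule: keep the original label.
            smoothed.append(labels[i])
        else:
            smoothed.append(counts.most_common(1)[0][0])
    return smoothed


# A 10-frame video cut into windows of W = 4 frames.
print(split_into_windows(10, 4))

# Hypothetical per-window predictions with one isolated false detection.
predictions = ["eat", "eat", "read", "eat", "eat", "cook", "cook", "cook"]
print(majority_vote_filter(predictions, radius=1))
```

In this toy sequence, the isolated `"read"` prediction is surrounded by `"eat"` and is therefore relabeled, while the boundary between `"eat"` and `"cook"` is preserved.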